It may be a good idea to check it out if you are not comfortable with Random Variables & Distributions. Some time back I wrote an article called “ But what is a Random Variable?”. Why we are interested in the above quest (keyword here - “long-run”) is because we have brought Random Variables (and hence uncertainty) in the mix here and therefore we need to measure the central tendency of our surprise. The next quest for us is to formulate “ How surprised I am going to be in the long run ?” In other words, we can improve our intuition on the calculation of the surprise function - which our common sense would say must be an addition and not a product (multiplication) of event probabilities by realizing that multiplication can be viewed as addition by working on the logarithm of the probabilities involved. The logarithm function helped in bringing the additive aspect of surprise home for us. So, the surprise is inversely proportional to the logarithm of the probability of an event (Random Variable). Next, let’s try to formulate it mathematically. it’s a measure of uncertainty!īy the grace of Aristotle’s logic, you can appreciate that we have established that information (surprise) and uncertainty (probability) have a relationship going on. Rarer the event, the more surprised you are! And what is probability? …. Your surprise is inversely proportional to the probability (chance) of an event happening. The benefit of thinking in terms of surprise is that most of the time with “information” we think very binarily - either I have the information or don’t, whereas “surprise” helps bring a notion of degree of variability. there is no information in this statement but if I tell you that the world will end tomorrow you would be very surprised (… at least very sad, I hope ! 😩)

E.g., if I tell you that SUN will rise tomorrow you would say - meh! no surprise i.e. Now information can also be seen as a “surprise” albeit the amount by which you get surprised will vary.

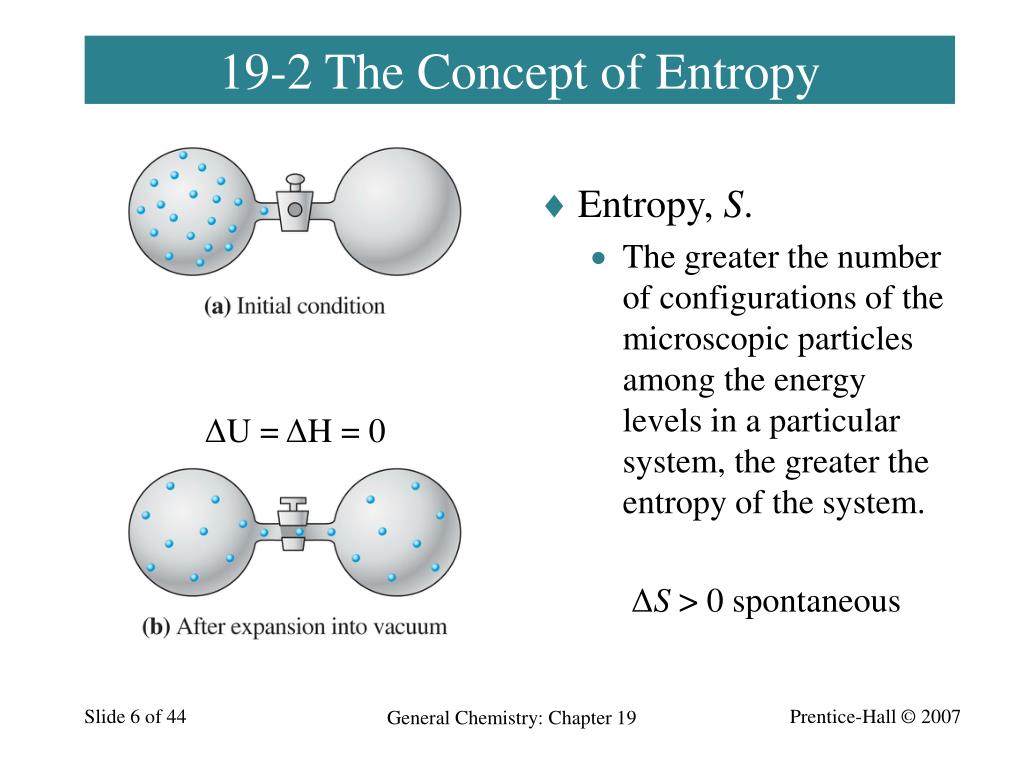

I explain this with the help of examples from an article by James Clear (Author of Atomic Habits). To understand these contexts let’s first check out disorder and its relation with entropy. However, the truth is all of the above-mentioned 3 perspectives are correct given the appropriate context. We can not make up our minds here as far as the definition is concerned. So now I have confused you more - entropy is not only the missing energy and the measure of disorder but it is also responsible for the disorder. We have all accepted this law because we observe and experience this all the time and the culprit behind this is none other than the topic of this writeup - yup, you got it, it’s Entropy! Rephrasing this obnoxious title into something a bit more acceptableĪnything that can go wrong, will go wrong - Murphy’s Law So what is it - missing energy, or a measure, or both? Let me provide some perspectives that hopefully would help you come to peace with these definitions. Entropy is a measure of disorder or randomness (uncertainty).Entropy is the missing (or required) energy to do work as per thermodynamics.Based on this result, you can notice that there are two core ideas here and at first, the correlation between them does not seem to be quite obvious.
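The surprise idea discussed in this writeup (the rarer the event, the bigger the surprise, and surprises of independent events add up) can be sketched in a few lines of Python. This is only a minimal sketch, assuming surprise is defined as the negative base-2 logarithm of the probability and that "long-run surprise" means the probability-weighted average of surprise. The function names `surprise` and `expected_surprise` and all the example probabilities are made up for illustration and do not come from the original article.

```python
import math


def surprise(p: float) -> float:
    """Surprise (in bits) of an event with probability p, assumed to be -log2(p)."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in the interval (0, 1]")
    return -math.log2(p)


def expected_surprise(probs) -> float:
    """Long-run average surprise: each outcome's surprise weighted by its probability."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Outcomes with probability 0 never happen, so they contribute nothing.
    return sum(p * surprise(p) for p in probs if p > 0)


# Illustrative (made-up) probabilities: a near-certain event vs. a very rare one.
sun_rises = surprise(0.999999)
world_ends = surprise(0.000001)

# For independent events the probabilities multiply, but the surprises add.
p_a, p_b = 0.5, 0.25
joint = surprise(p_a * p_b)
summed = surprise(p_a) + surprise(p_b)

# Long-run surprise of a fair coin vs. a heavily biased coin.
fair_coin = expected_surprise([0.5, 0.5])
biased_coin = expected_surprise([0.9, 0.1])

print("sun rises:", sun_rises, "world ends:", world_ends)
print("joint:", joint, "summed:", summed)
print("fair coin:", fair_coin, "biased coin:", biased_coin)
```

The biased coin comes out less surprising in the long run than the fair one, which matches the intuition that a more predictable source carries less uncertainty.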
